The Art of Prompting: Utilize LLMs

Learn how to communicate effectively with LLMs to unlock their full potential for productivity, creativity, and problem-solving.

Large Language Models (LLMs) are changing the way we interact with technology, and knowing how to prompt them effectively is key to getting the most out of them.

In this article, we’ll delve into the fundamentals of LLMs, explore the basics of prompt engineering, and preview the future of AI assistants.

How LLMs Work

Since the 1950s, researchers and scientists have been working towards enabling computers to understand, interpret, and generate human language, laying the foundation for the fascinating domain of Natural Language Processing (NLP).

Advances in computing over the last few decades, including machine learning, neural networks, cloud computing, and vast datasets, have significantly improved computers' ability to understand language, paving the way for powerful language models.

A pivotal moment came in 2017 with the introduction of the transformer architecture, which is built on self-attention. Self-attention lets a model weigh how relevant every other word in a passage is to the word it is currently processing, so it captures context and relationships between words far more effectively than earlier approaches.

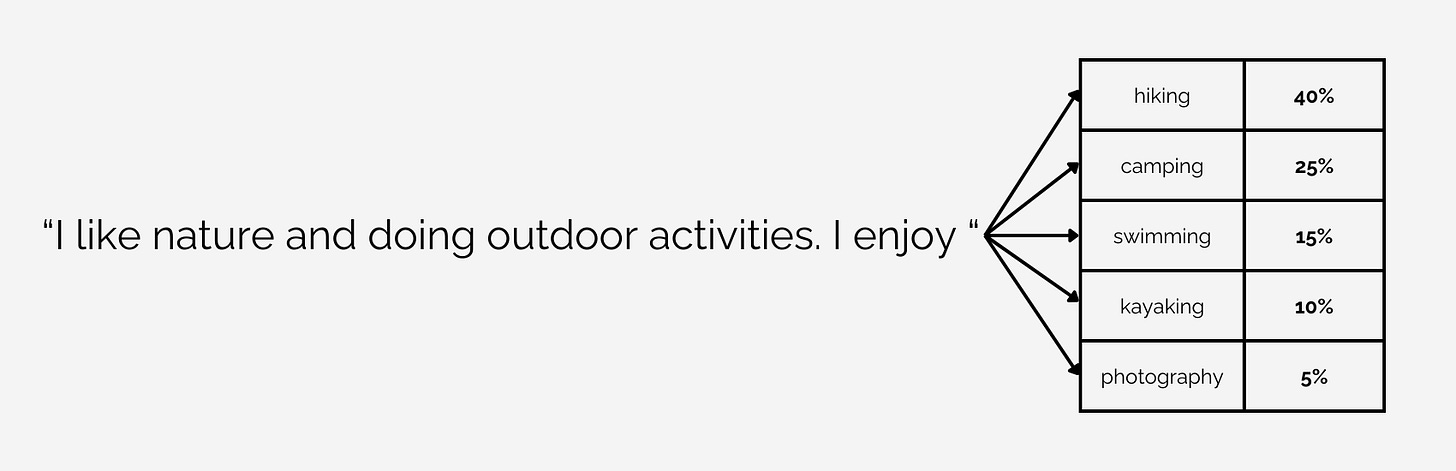

Fundamentally, LLMs are highly sophisticated word predictors. They excel at predicting the next word (more precisely, the next token) in a sequence based on the vast amount of text they've been trained on. Because they choose each word by sampling from a probability distribution, the same prompt can produce a different output from one run to the next.
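To make the idea of probabilistic word prediction concrete, here is a minimal sketch in Python. The candidate words and their scores are made up for illustration and do not come from a real model, and `temperature` is a common sampling setting that this article does not otherwise cover. Run the loop a few times and the printed words will usually differ.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, scores, temperature=1.0, rng=random):
    """Pick one candidate word at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical scores for the word that follows "The cat sat on the"
candidates = ["mat", "sofa", "roof", "moon"]
scores = [3.0, 2.2, 1.5, 0.1]

for _ in range(3):
    print(sample_next_word(candidates, scores, temperature=0.8))
```

A real model repeats this step for every word it generates, over a vocabulary of many thousands of tokens rather than four candidates.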

Crafting Effective Prompts

While today's LLMs can generate fluent, human-like text with ease, their brilliance has a blind spot: they rely on making assumptions based on patterns in their training data to generate responses. This can lead to potential misinterpretations, incomplete results, and even fabricated information (known as “hallucinations”).

Prompt engineering offers a set of valuable techniques designed to maximize the effectiveness of LLMs and mitigate these risks. It’s the science of constructing the initial prompt to enhance the model’s understanding and guide it towards generating the desired output, essentially reducing the need for assumptions.

The field of prompt engineering is extensive and rapidly evolving, with new techniques and approaches emerging constantly. It's definitely worthwhile to explore and experiment to discover what works best for you. Here, we’ll focus on some basic but powerful methods to get you started:

Mastering the Art of Instruction

Clear and detailed instructions are essential for guiding the LLM to produce a desired output. Here’s a breakdown of key elements to include in your prompts:

Define the Task (“What do I want?”)

State the task using actionable verbs and descriptive adjectives in a conversational tone.

Be clear and precise to minimize ambiguity.

"Write an inspiring and informative article about the positive impact of community gardens on urban sustainability."

Identify the Audience (“Who am I?”)

Specify the intended audience to tailor the language and tone.

“Explain climate change to a 5-year-old.” vs. “Explain climate change to a scientist.”

Adopt a Persona (“Who are you?”)

Instruct the LLM to adopt a specific perspective or voice.

"Write a poem from the perspective of a tree." or "Create a dialogue between two historical figures."

Provide Context (“What should be considered?”)

Offer relevant background information or guidelines.

"Write a news article about a recent development in urban sustainability, focusing on its impact on affordable housing."

Set the Format (“How do I want it?”)

Define the desired structure or style of the output.

"Summarize the key points in bullet points" or "Write a persuasive essay in five paragraphs."

Establish Constraints (“What do I NOT want?”)

Explicitly state any restrictions, exclusions, or limitations on a separate line within your prompt.

Task: "Write an engaging blog post about the benefits of cycling for urban commuters."

Constraint: "Do not mention any environmental benefits."
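The elements above can be combined programmatically. The following is a minimal sketch, not a standard API: `build_prompt` and its parameter names are hypothetical, and the audience text is invented for illustration. Each element becomes one labeled line, and constraints are kept on lines of their own.

```python
def build_prompt(task, audience=None, persona=None, context=None,
                 output_format=None, constraints=None):
    """Assemble a prompt from the optional elements, one labeled line each."""
    parts = [
        ("Persona", persona),
        ("Audience", audience),
        ("Context", context),
        ("Task", task),
        ("Format", output_format),
    ]
    # Skip any element that was not supplied.
    lines = [f"{label}: {value}" for label, value in parts if value]
    # Constraints go on their own lines so they are not buried in the task.
    for constraint in constraints or []:
        lines.append(f"Constraint: {constraint}")
    return "\n".join(lines)

prompt = build_prompt(
    task="Write an engaging blog post about the benefits of cycling for urban commuters.",
    audience="City residents who currently drive to work.",
    output_format="Five short paragraphs.",
    constraints=["Do not mention any environmental benefits."],
)
print(prompt)
```

Keeping the elements as separate arguments makes it easy to change one of them, such as the audience, without rewriting the rest of the prompt.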

Advanced Formatting Techniques

Strategic, consistent formatting helps the model parse your instructions accurately and can significantly improve the quality and relevance of its output, especially for more complicated tasks. Consider these techniques:

Capitalization: Use ALL CAPS to emphasize keywords or phrases.

Line Breaks (\n): Separate distinct parts/ideas with new lines.

Bullet Points: List multiple items or steps in an organized manner.

Numbers/Letters: Enumerate steps or options for structure.

Quotes (" "): Denote direct speech, citations, or specific terms.

Parentheses (()): Provide additional information or clarification.

Square Brackets ([]): Create placeholders for information the LLM should fill in.

Angle Brackets (<>): Indicate variables or parameters.

Curly Brackets ({}): Denote specific data types or formats.
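Several of these conventions can be seen together in a reusable template. The sketch below is illustrative only: the template text, the `<review_text>` variable, and the `fill_variables` helper are assumptions made for this example. Angle-bracket variables are filled in by code before the prompt is sent, while square-bracket placeholders are left for the LLM to complete.

```python
import re

TEMPLATE = """TASK: Summarize the customer review below.

REVIEW: "<review_text>"

STEPS:
1. Identify the main complaint.
2. Suggest one improvement.

OUTPUT:
Complaint: [one sentence]
Improvement: [one sentence]"""

def fill_variables(template, values):
    """Replace each <name> variable with its value; fail loudly if one is missing."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for <{name}>")
        return values[name]
    return re.sub(r"<(\w+)>", substitute, template)

prompt = fill_variables(
    TEMPLATE, {"review_text": "The app crashes whenever I upload a photo."}
)
print(prompt)
```

Raising an error for a missing variable avoids sending a prompt that still contains an unfilled `<name>`, which the model would have to guess about.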

Zero-Shot and Few-Shot Prompting: The Power of Examples

One of the most powerful ways to improve LLM output is to provide examples, demonstrating the desired format, style, or content you’re looking for.

Zero-Shot Prompting: This approach, where you provide a single instruction without examples, is often sufficient for straightforward tasks where the desired output is clear and doesn’t require a specific structure or style.

Few-Shot Prompting: This technique involves providing a few examples of the desired output along with your instruction. It’s particularly useful when you need to:

Establish a specific format, style, or tone.

Guide the LLM’s creativity within certain boundaries.

Ensure consistency across responses in the conversation.

Handle nuanced or domain-specific tasks.

By providing a few well-crafted examples, you essentially give the LLM a blueprint for the desired output, enabling it to better align with your expectations and produce more tailored and effective results.
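As a minimal sketch of few-shot prompting, the helper below places worked examples between the instruction and the new input. The function name, the "Input:"/"Output:" labels, and the sample reviews are assumptions chosen for illustration. Passing an empty list of examples produces a zero-shot prompt.

```python
def few_shot_prompt(instruction, examples, new_input):
    """Build a prompt with an instruction, worked examples, and an open slot."""
    blocks = [instruction]
    for source, target in examples:
        blocks.append(f"Input: {source}\nOutput: {target}")
    # End with an unanswered "Output:" so the model continues the pattern.
    blocks.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(blocks)

examples = [
    ("The checkout page was fast and simple.", "positive"),
    ("My order arrived two weeks late.", "negative"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "Support answered my question within minutes.",
)
print(prompt)
```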

Here’s a practical example that sets out a response template for a structured brainstorming session:

Task: I need help brainstorming approaches to improve public transportation and reduce car usage in our city. Please use the following format for each response:

Observation: [Your observation about a current challenge related to public transportation or car usage]

Proposed Solution: [A specific, actionable solution to address the observation]

Key Benefit: [The primary advantage of implementing this solution]

Potential Concerns: [Acknowledge any negative effects or consequences]

Prompting the Future with AI Assistants

A well-crafted prompt can often provide what you need, especially when you have a clear understanding of the task and relevant details. However, for open-ended or complex problems, starting with a shorter, more focused prompt is a powerful strategy. Engaging in a conversation allows you to explore ideas, clarify ambiguities, and refine your approach one step at a time. This intuitive, iterative process makes it easier to draw on the vast amount of information the model has learned.
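An iterative conversation can be pictured as a growing list of messages. The sketch below assumes the role/content message shape that many chat-style interfaces use; the helper names and the placeholder reply are hypothetical, and no real model is called.

```python
def add_turn(history, role, content):
    """Return a new history with one more message appended."""
    return history + [{"role": role, "content": content}]

def render(history):
    """Flatten the history into plain text, one message per line."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in history)

history = []
history = add_turn(history, "user", "Suggest ways to reduce car usage in our city.")
# Placeholder text standing in for a real model reply.
history = add_turn(history, "assistant", "(model reply would go here)")
history = add_turn(history, "user", "Focus on the second idea and list its main risks.")

print(render(history))
```

Each follow-up message builds on the earlier turns, which is what lets a short first prompt be refined instead of rewritten from scratch.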

Evolution of the LLM Agent

Planning and Reasoning: Advanced prompting techniques like Chain of Thought prompting encourage the model to break complex tasks into smaller, manageable steps, mirroring human problem-solving. This enables LLMs to tackle intricate challenges that a single direct answer could not address effectively. Additionally, training techniques like Reinforcement Learning from Human Feedback (RLHF) use human input to refine how the model responds.
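A simple form of Chain of Thought prompting can be sketched as follows. The wording of the instructions, the "Answer:" convention, and both helper names are assumptions for this example, and `sample_response` is a made-up string standing in for real model output.

```python
def chain_of_thought_prompt(question):
    """Wrap a question with instructions to reason step by step before answering."""
    return (
        f"Question: {question}\n\n"
        "Work through the problem step by step, numbering each step.\n"
        "Then give the result on a final line that starts with 'Answer:'."
    )

def extract_answer(response):
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return None

prompt = chain_of_thought_prompt(
    "A bus carries 40 passengers and runs 12 trips a day. How many passengers per day?"
)
# A made-up response, standing in for real model output.
sample_response = "1. Each trip carries 40 passengers.\n2. 40 * 12 = 480.\nAnswer: 480"
print(extract_answer(sample_response))  # prints 480
```

Asking for a fixed final line keeps the reasoning visible while still making the result easy to pull out.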

Memory: LLMs utilize memory to process information and make decisions. The LLM’s context window, its short-term memory, determines how much of the ongoing conversation it can “remember”. Long-term memory techniques like embeddings (vector representations of words and phrases) and vector storage allow LLM agents to remember information beyond the immediate context and produce coherent responses over extended conversations.
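The idea behind embedding-based long-term memory can be sketched with a toy example. The three-dimensional vectors below are hand-written for illustration; real embeddings are produced by an embedding model and have far more dimensions. The `recall` helper is hypothetical and simply ranks stored notes by cosine similarity to the query vector.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors by angle: close to 1.0 means very similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def recall(query_vector, memory, top_k=1):
    """Return the top_k stored texts whose vectors are most similar to the query."""
    ranked = sorted(
        memory,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]

# Toy "vector store": each note is paired with a hand-written vector.
memory = [
    ("User prefers cycling to work.", [0.9, 0.1, 0.0]),
    ("User is allergic to peanuts.", [0.0, 0.2, 0.9]),
    ("User lives near a tram stop.", [0.7, 0.6, 0.1]),
]
# Stands in for the embedding of a question such as "How does the user commute?"
query = [0.8, 0.3, 0.0]
print(recall(query, memory, top_k=2))
```

The recalled notes would then be added to the prompt, so the model can use them even though they are no longer inside its context window.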

Tool Usage: Building upon their reasoning and memory capabilities, LLMs can leverage a wide range of tools like search engines, specialized databases, and even code interpreters. This expanded toolkit enables them to perform tasks like fact-checking, complex data analysis, up-to-date information retrieval, and even generating visual representations of data, making them even more versatile and valuable in diverse applications.
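Tool usage typically means the model emits a structured request that surrounding code carries out. The sketch below assumes a simple JSON request format with `tool` and `arguments` keys; that format, the two toy tools, and the `run_tool_call` helper are all hypothetical, and `model_output` is a made-up string rather than real model output.

```python
import json

def add_numbers(a, b):
    """Toy tool: add two numbers."""
    return a + b

def word_count(text):
    """Toy tool: count whitespace-separated words."""
    return len(text.split())

# Registry mapping tool names to the functions that implement them.
TOOLS = {"add_numbers": add_numbers, "word_count": word_count}

def run_tool_call(raw_call):
    """Parse a JSON tool request and run the matching function."""
    call = json.loads(raw_call)
    name = call["tool"]
    if name not in TOOLS:
        return f"error: unknown tool '{name}'"
    return TOOLS[name](**call.get("arguments", {}))

# A made-up request standing in for what a model might emit.
model_output = '{"tool": "add_numbers", "arguments": {"a": 19, "b": 23}}'
print(run_tool_call(model_output))  # prints 42
```

In an agent loop, the tool result would be passed back to the model as new context so it can continue its reasoning with the fresh information.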

LLM agents are rapidly transforming into capable collaborators, progressively learning to understand our intentions, reason through complex tasks, and take appropriate actions independently. Along with advancements in image, video, and audio generation, we’re witnessing the rise of AI assistants that integrate seamlessly into our work and daily lives, opening up a world of possibilities for increased productivity and creativity.

By better understanding the fundamentals of these techniques and the evolving capabilities of LLMs, we can continuously adapt to minimize the gap between technology and user needs, empowering us to realize the full potential of this transformative technology.